LLM Essay Evaluator

An LLM tool for evaluating essays with local models

The Essay Evaluator automates essay grading using local language models and sentiment analysis, built on the DSPy framework. It provides detailed, objective feedback on grammar, structure, and content. The project was built as a self-directed learning exercise and includes both the evaluation logic and a user-friendly interface. Read more in the DSPy project guide on Medium.

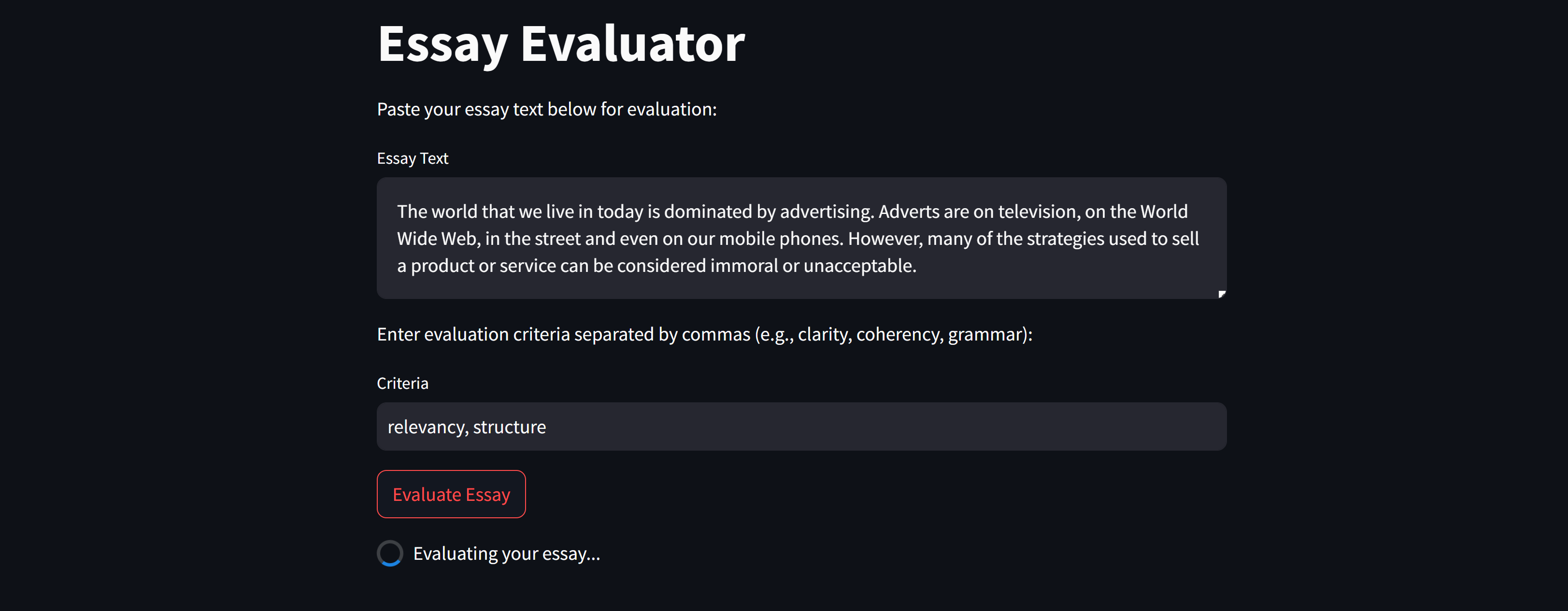

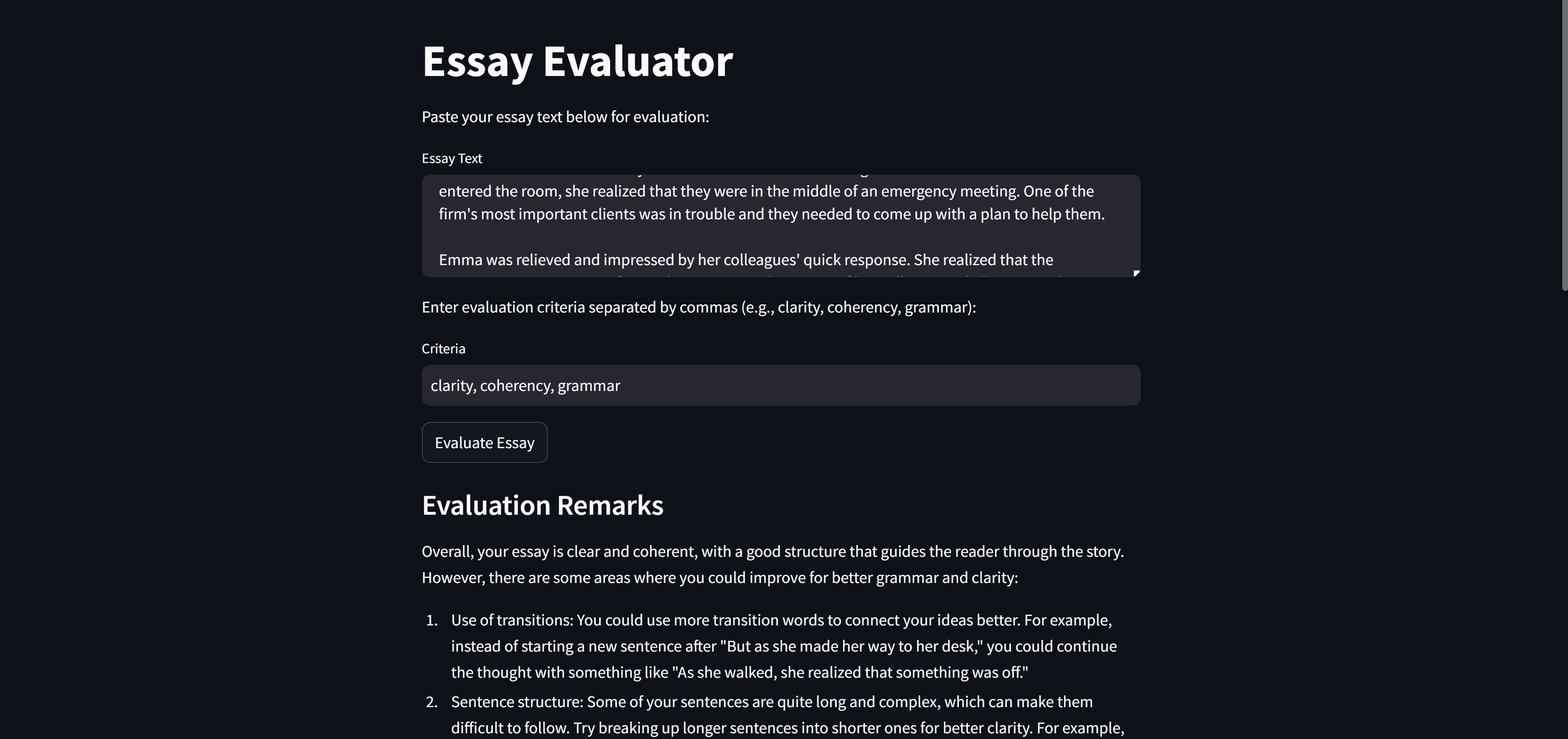

Live dashboard of the interface

Key Features

- Structured Feedback: Offers comprehensive feedback on grammar, structure, and content quality.

- Automated Essay Evaluation: Reduces the time and effort required to grade essays by leveraging machine learning.

- Multiple Evaluation Criteria: Assesses content quality, structure, grammar, and argumentation.

Technical Implementation

The system is built using modern NLP frameworks and tools:

- DSPy Framework: For structured interactions with language models

- Local LLM Deployment: Ensures privacy and reduces API costs using Ollama

- Sentiment Analysis: Emotion and bias detection
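As a rough illustration of how DSPy and a local Ollama model can be wired together, the sketch below defines a signature for essay evaluation and wraps it in a `dspy.ChainOfThought` module. It is not the project's actual code: the function name `build_evaluator`, the signature `EssayEvaluation`, its field names, the model tag `llama3.2`, and the default Ollama address are all assumptions made for this example.

```python
def build_evaluator(model: str = "ollama_chat/llama3.2",
                    api_base: str = "http://localhost:11434"):
    """Return a DSPy module that evaluates an essay with a local Ollama model.

    The model tag and address are assumptions; use whatever model you have
    pulled into your own Ollama installation.
    """
    # Imported lazily so the module can be loaded without DSPy installed.
    import dspy

    class EssayEvaluation(dspy.Signature):
        """Evaluate an essay and give feedback on each criterion."""

        # Hypothetical field names, chosen to mirror the criteria listed above.
        essay: str = dspy.InputField()
        grammar_feedback: str = dspy.OutputField(desc="comments on grammar and spelling")
        structure_feedback: str = dspy.OutputField(desc="comments on organization and flow")
        content_feedback: str = dspy.OutputField(desc="comments on content quality")
        score: int = dspy.OutputField(desc="overall grade from 0 to 100")

    # Point DSPy at the local Ollama server; no API key is required.
    lm = dspy.LM(model, api_base=api_base, api_key="")
    dspy.configure(lm=lm)

    # ChainOfThought asks the model to reason before filling the output fields.
    return dspy.ChainOfThought(EssayEvaluation)
```

With Ollama running and the model pulled, calling `build_evaluator()(essay=text)` should return a prediction whose attributes match the output fields of the signature, for example `result.score` and `result.grammar_feedback`.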

Workflow

- Text Input: Essay submission through the interface

- Preprocessing: Text cleaning and structure analysis

- LLM Analysis: Multi-criteria evaluation using local models

- Feedback Generation: Comprehensive report with improvement suggestions

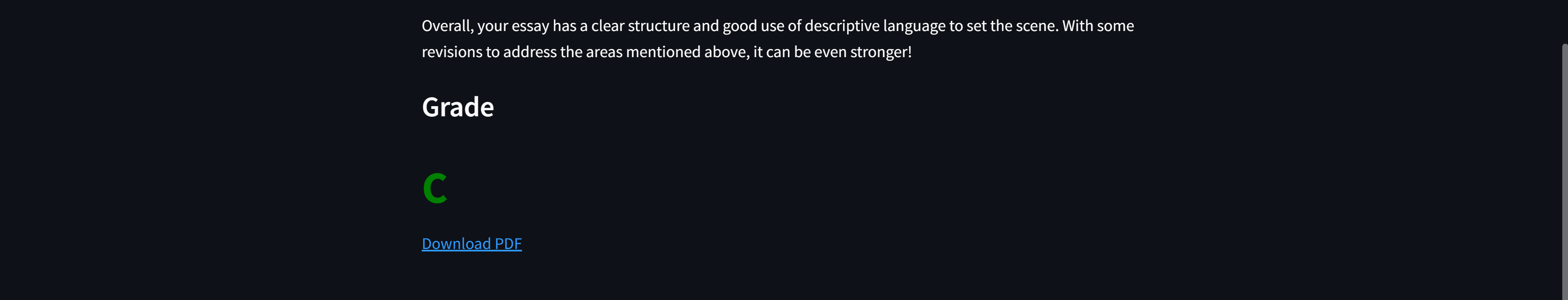

- Score Assignment: Quantitative grade across evaluation criteria
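The preprocessing and score assignment steps of the workflow above can be sketched in plain Python. This is a minimal sketch under stated assumptions, not the project's implementation: the helper names `preprocess` and `overall_score`, the naive sentence splitting, the 0 to 100 scale, and the weighted average are all hypothetical choices for illustration.

```python
import re
from typing import Dict, Optional


def preprocess(essay: str) -> dict:
    """Clean the text and collect simple structure statistics."""
    # Collapse runs of spaces and tabs, and trim surrounding whitespace.
    text = re.sub(r"[ \t]+", " ", essay).strip()
    # Treat one or more blank lines as a paragraph break.
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]
    # Naive sentence split: ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text) if s]
    words = re.findall(r"[A-Za-z']+", text)
    return {
        "text": text,
        "paragraphs": len(paragraphs),
        "sentences": len(sentences),
        "words": len(words),
    }


def overall_score(criterion_scores: Dict[str, float],
                  weights: Optional[Dict[str, float]] = None) -> float:
    """Combine per-criterion scores (assumed 0-100) into one weighted grade."""
    if weights is None:
        # Default to equal weighting across all criteria.
        weights = {name: 1.0 for name in criterion_scores}
    total_weight = sum(weights[name] for name in criterion_scores)
    weighted = sum(score * weights[name] for name, score in criterion_scores.items())
    return round(weighted / total_weight, 1)
```

The cleaned text from `preprocess` would be what gets passed to the language model, and `overall_score` would run on the per-criterion scores the model returns. Passing a `weights` dictionary lets a grader emphasize one criterion, such as content, over the others.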

Multi-Criteria Evaluation

Downloadable Grade and Evaluation
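One simple way to make a grade and its evaluation downloadable is to serialize them to a file that the interface can offer to the user. The sketch below assumes a JSON report; the function name `save_report` and the report layout are hypothetical, and the project may use a different format.

```python
import json
from pathlib import Path


def save_report(path, grade, feedback):
    """Write the grade and per-criterion feedback to a JSON file."""
    # Hypothetical report layout: one overall grade plus feedback per criterion.
    report = {"grade": grade, "feedback": feedback}
    Path(path).write_text(json.dumps(report, indent=2), encoding="utf-8")
    return report
```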

Conclusion

The Essay Evaluator shows how DSPy can be used to automate and enhance the essay grading process. By combining local language models with sentiment analysis, it provides comprehensive, objective, and instant feedback, making it a practical tool for educators and students alike.

For more details and to explore the implementation, visit the GitHub repository.